Dan Shipper scanned a page from Erik Larson’s Winston Churchill biography, The Splendid and the Vile, and pressed save. The app he was demoing identified the book, generated a summary, and produced character breakdowns calibrated to exactly where he was in the story: no spoilers past page 203.

Nobody programmed it to do any of this.

Instead, Dan’s app has a handful of basic tools—“read file,” “write file,” and “search the web”—and an AI agent smart enough to combine them in a way that matches the user’s request. When it generates a summary, for example, that’s the agent deciding on its own to search the web, pull in relevant information, and write a file that the app displays.

This is what we call agent-native architecture—or, in Dan’s shorthand, “Claude Code in a trench coat.” On the surface, it looks like regular software, but instead of pre-written code dictating every move the software makes, each interaction routes to an underlying agent that figures out what to do. There’s still code involved—it makes up the interface and defines the tools that are available to the agent. But the agent decides which tools to use and when, combining them in ways the developer never explicitly programmed.
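To make the idea concrete, here is a minimal sketch of that routing loop in Python. It is an illustration under stated assumptions, not Dan's actual code: the three tools are in-memory stand-ins, and `scripted_model` is a hypothetical placeholder for the agent's decision step. A real model would choose its next tool freely; this one follows a fixed script so the example runs offline.

```python
from typing import Callable, Optional

# Hypothetical in-memory "disk" so the sketch runs without touching real files.
FILES: dict[str, str] = {}

def read_file(path: str) -> str:
    return FILES.get(path, "")

def write_file(path: str, text: str) -> str:
    FILES[path] = text
    return f"wrote {path}"

def search_web(query: str) -> str:
    # Placeholder: a real tool would call a search service here.
    return f"(search results for: {query})"

# The developer defines which tools exist, and nothing more.
TOOLS: dict[str, Callable[..., str]] = {
    "read_file": read_file,
    "write_file": write_file,
    "search_web": search_web,
}

def scripted_model(request: str, history: list) -> Optional[tuple]:
    """Stand-in for the agent. Returns (tool_name, arguments) or None when done."""
    step = len(history)
    if step == 0:
        return "read_file", {"path": "scan.txt"}
    if step == 1:
        return "search_web", {"query": history[0][1]}
    if step == 2:
        return "write_file", {"path": "summary.md", "text": history[1][1]}
    return None

def run_agent(request: str, choose_next=scripted_model) -> list:
    """Ask the agent for its next tool call, run it, and repeat until it stops."""
    history = []
    while (call := choose_next(request, history)) is not None:
        name, args = call
        history.append((name, TOOLS[name](**args)))
    return [name for name, _ in history]
```

Notice that `run_agent` contains no feature logic. The order of tool calls comes entirely from whatever `choose_next` returns, which is where a real agent would sit.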

At our first Agent Native Camp, Dan and the general managers of our software products Cora, Sparkle, and Monologue shared how they’re each building in light of this fundamental shift. They’re working at different scales and with different constraints, so they’re drawing the lines in different places. Here’s what they shared about how the architecture works, what it looks like in production, and what goes wrong when you get it right.

Key takeaways

- The AI is the app. Instead of coding every feature, you define a few simple tools the AI is allowed to use—for instance, read a file, write a file, and search the web. When you ask it to do something, it decides on its own which tools to reach for and how to combine them.

- Simpler tools get smarter results. The smaller and more basic you make each tool, the more creatively the AI combines them. Claude Code is powerful because its core tool—running terminal commands—can do almost anything.

- Rules belong in the tools, not the instructions. You can ask an AI to be careful, but it might ignore you. If an action is irreversible—like deleting files—the safeguard has to be built into the tool itself.

- You don’t have to start over to start learning. Give the AI a safe space to interact with your existing app and experiment outside the live product. You’ll learn what the agent needs without risking what already works. Just don’t get attached to the code—as models improve, expect to throw things out and rebuild every few months.
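The third takeaway, rules in the tools rather than the instructions, can be sketched as follows. This is one possible shape, assuming a hypothetical `make_delete_tool` helper and an `ask_user` callback that the app owns. The agent only ever supplies a path, so no amount of prompt wording can skip the approval check.

```python
from pathlib import Path
from typing import Callable

def make_delete_tool(ask_user: Callable[[str], bool]) -> Callable[[str], str]:
    """Build a delete tool whose safeguard lives in code, not in the prompt.

    ask_user is a hypothetical app-side callback (for example, a confirmation
    dialog). The agent cannot pass or override it.
    """
    def delete_file(path: str) -> str:
        if not ask_user(f"Delete {path}? This cannot be undone."):
            # Report the refusal back to the agent instead of deleting.
            return f"refused: user did not approve deleting {path}"
        Path(path).unlink()
        return f"deleted {path}"
    return delete_file
```

The design choice is that approval is not a parameter of the tool. If it were, the agent could simply set it to true.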

The app for people who actually do what they said they’d do

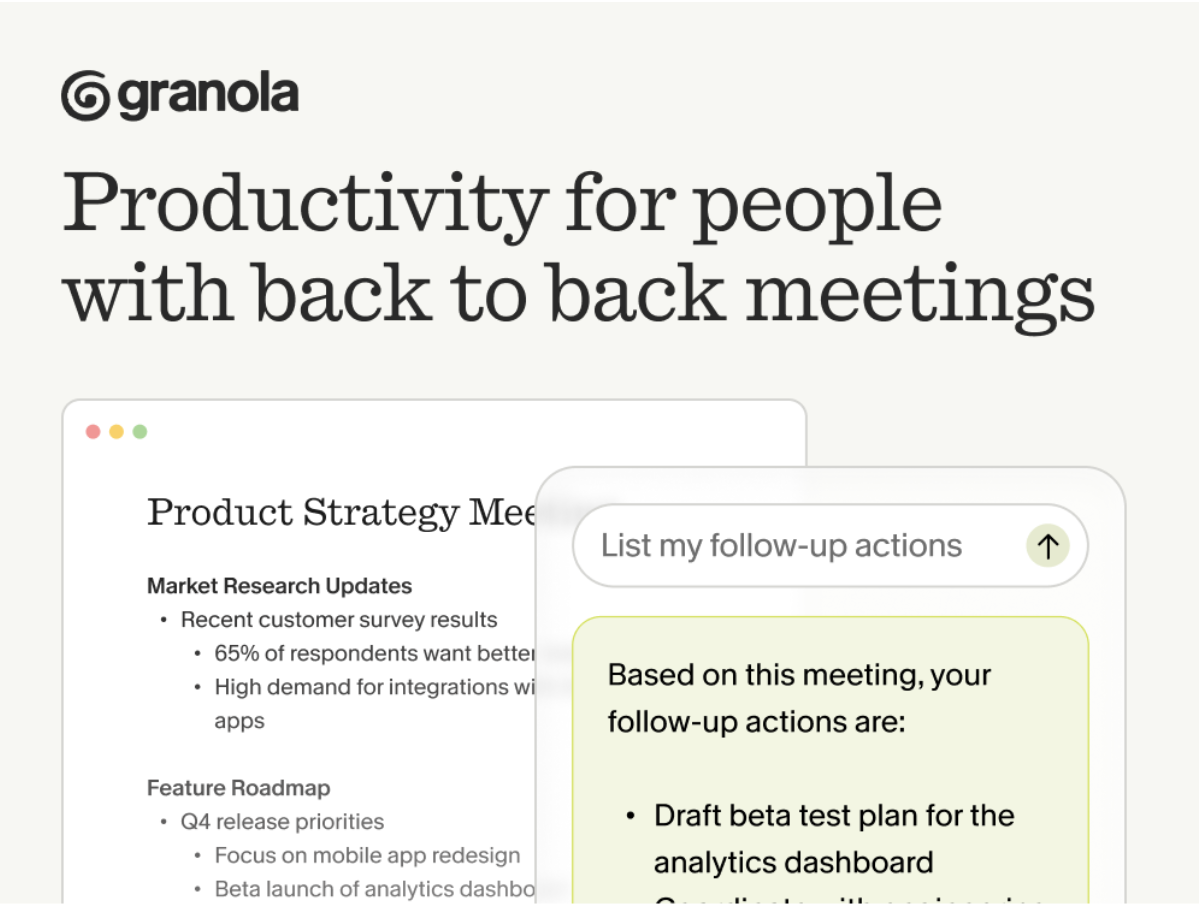

You know that feeling when you leave a meeting and immediately forget half of what you agreed to? That’s not a memory problem. It’s a meetings problem. When you’re back-to-back all day, there’s no time to process. No time to write the follow-up. No time to turn “we should probably...” into something that actually happens.

Granola helps you become the person who actually does what they said they’d do. You take notes during the meeting : just quick bullets, nothing formal. Granola transcribes in the background and turns those notes into clear summaries with actual next steps. After the call, you can share your notes with the team so everyone’s aligned. Or chat with them to pull out exactly what you need to do next, without re-reading the whole thing. No more “wait, what did we decide?” moments. No more dropping the ball because you had three calls in a row and couldn’t keep track. Just clarity. And follow-through.

Download Granola and try it on your next meeting.

How agent-native works

Traditional software can only do what its code explicitly tells it to do. Click “sort by date,” and it sorts by date. Click “export,” and you get a CSV. It will never spontaneously summarize your inbox or reorganize your files by topic unless someone wrote the code for that exact feature.

Instead of coded features, an agent-native app has tools (small, discrete actions like “read file” or “delete item”) and skills (instructions written in plain English that describe how to combine those tools). An agent uses those tools and skills to produce an outcome that you specify, such as identifying what book you are reading from one page.
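One way to picture the split between tools and skills is as two small record types. This is a sketch under assumptions: the names `Tool`, `Skill`, and `build_context` are hypothetical, and the text format handed to the agent is invented for illustration. The point is that a tool wraps code, while a skill is just plain English.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Tool:
    name: str
    description: str
    run: Callable[..., str]  # the small, discrete action itself

@dataclass
class Skill:
    name: str
    instructions: str  # plain English describing how to combine tools

def build_context(tools: list, skills: list, outcome: str) -> str:
    """Assemble what the agent sees: available tools, skills, and the goal."""
    lines = ["Tools you may call:"]
    lines += [f"- {t.name}: {t.description}" for t in tools]
    lines.append("Skills:")
    lines += [f"- {s.name}: {s.instructions}" for s in skills]
    lines.append(f"Outcome requested: {outcome}")
    return "\n".join(lines)
```

Under this sketch, adding a capability means writing a new skill in prose or registering a new tool. There is no feature-specific code path to add.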

Three principles make this work:
