
Grok 4 is topping some big AI benchmarks. So why have the responses to it been so mixed? And how come Every’s engineers aren’t using it much?

xAI’s latest launch, Grok 4, is positioned as an LLM with advanced reasoning capabilities. The model debuted last week in a livestream featuring Elon Musk and other members of the xAI team seated on black sofas and pointing at graphs that seemed to indicate Grok 4’s superior performance on prominent benchmarks like Humanity’s Last Exam and ARC-AGI.

But the TL;DR from our Studio team is this: Grok 4 is smart, but seems overtrained on benchmarks—while not being useful enough to be a go-to for everyday tasks. It should be good at coding, but without its own built-in command-line interface (CLI), the barrier to trying it is high. (A CLI is a text-based interface where developers type instructions directly to the model, without needing to switch between apps or windows.)

“There are new competitive dynamics here—Claude Code [which has its own CLI] is sticky,” Every CEO and cofounder Dan Shipper says.

Here’s what’s new, what the team thinks, and what everyone else thinks.

The nuts and bolts of Grok 4

Grok 4 is a reasoning model where you can’t see the reasoning tokens or turn the reasoning mode off. In other words, it always thinks deeply before answering, but won’t show you how it got there or let you stop it from thinking so deeply.

xAI trained the model with reinforcement learning tailored to increase its reasoning capabilities, and as a result Grok 4 is touted as excelling in technical domains like math and physics. It accepts both image and text prompts and has a context window of 256,000 tokens, double that of its predecessor, Grok 3, and more than both OpenAI’s o3 and Claude Opus 4, which are currently capped at 200,000 tokens.

The launch also included Grok 4 Heavy, described as Grok 4’s more powerful version. While explaining how it worked, Musk said it “spawns multiple [Grok 4] agents in parallel,” and then they compare their work “like a study group” to find the best answer.
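
To make the "study group" idea concrete, here is a minimal sketch of the general pattern: run several agents on the same question in parallel, then pick the answer most of them agree on. This is not xAI's implementation, which has not been published in detail. The `study_group` function, the stand-in agents, and the use of a majority vote for the "compare their work" step are all assumptions made for illustration.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical type: an agent takes a question and returns an answer string.
Agent = Callable[[str], str]

def study_group(question: str, agents: List[Agent]) -> str:
    """Run every agent on the question in parallel and return the most common answer."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        answers = list(pool.map(lambda agent: agent(question), agents))
    # Assumption: a simple majority vote stands in for the comparison step.
    return Counter(answers).most_common(1)[0][0]

# Stand-in agents; real ones would each call a model.
agents = [lambda q: "42", lambda q: "42", lambda q: "41"]
print(study_group("What is 6 * 7?", agents))
```

A real system would likely compare reasoning rather than count matching strings, but the shape is the same: fan out, then reconcile.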

The models are available to consumers through two subscription plans: the “SuperGrok” plan at $30 per month, or the “SuperGrok Heavy” plan at $300 per month, which includes access to Grok 4 Heavy. For developers, Grok 4 matches the cost of Anthropic's Claude Sonnet 4: $3 per million input tokens and $15 per million output tokens.
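
For a rough sense of what those developer rates mean per request, here is a small sketch that applies the quoted prices to a token count. The function name and the example request size are hypothetical, and the sketch does not account for how hidden reasoning tokens are billed, which is not covered here.

```python
# Prices quoted above, in dollars per million tokens.
INPUT_PRICE_PER_MILLION = 3.00
OUTPUT_PRICE_PER_MILLION = 15.00

def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated dollar cost of one API call at the quoted rates."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost

# Hypothetical request: a 20,000-token prompt with a 2,000-token reply.
print(f"${estimate_cost(20_000, 2_000):.2f}")
```

At these rates, output tokens cost five times as much as input tokens, so long replies dominate the bill.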

When a model gets the answer right but misses the point

Grok 4 should, in theory, excel at coding tasks thanks to its reasoning-first training. But early signals suggest that it’s been overfitted to do well on benchmarks—or to correctly answer what writer Zvi Mowshowitz calls “exam-shaped questions.” Physicist Casey Handmer asked Grok four questions where the process of answering mattered more than the result, and found that the model did not perform very well. “Grok 4 is routinely nailing Physics Olympiad style problems,” Handmer tweeted, “and yet it seems to still be missing the core of insight which is so critical to physics.”

This leaves Grok 4 seeming more useful than it actually is in the real world. Its lack of tooling adds to the friction: Grok 4 doesn’t come with a built-in CLI, so using it takes more setup unless you go through a third-party tool like the AI code editor Cursor. (Most of the Every team has moved away from Cursor, since Claude Code is now more tightly integrated into its day-to-day workflows.)

And then there are the safety issues associated with xAI’s models. Writer Simon Willison found that Grok 4 appeared to reference Elon Musk’s views when responding to controversial questions. An Anthropic researcher openly criticized xAI for not releasing any documentation of its safety testing, a standard practice in the industry. Grok 3 also showered praise on Adolf Hitler earlier this month, and though xAI issued a statement of apology, this kind of behavior from a frontier model does little to build trust.

What everyone at Every is thinking…

Here’s how Grok 4 performs on some of the benchmarks the Every team uses internally to evaluate new models:

Diplomacy: Top-level performance

“Grok 4 is o3-level in Diplomacy… that’s to say S-tier, a step above all others.”—Alex Duffy, head of AI training at Every, on the new model’s performance on the benchmark he co-created: evaluating LLMs through their performance on the complex strategy game Diplomacy

Reader engagement test: Still behind Claude

“Ran the same ‘reader engagement check’ we've tested the last few frontier models on with Grok 4, [it] does as well as [OpenAI’s] o3 does, which is worse than Claude Opus 4.

The reader engagement check is a prompt we've used to have an LLM read some writing (specifically tweets so far) and then see if the piece of writing keeps a reader engaged as [they] go from sentence to sentence. We’ve found that Claude Opus 4 is the only model that is able to do it reliably.”—Danny Aziz, general manager of Spiral

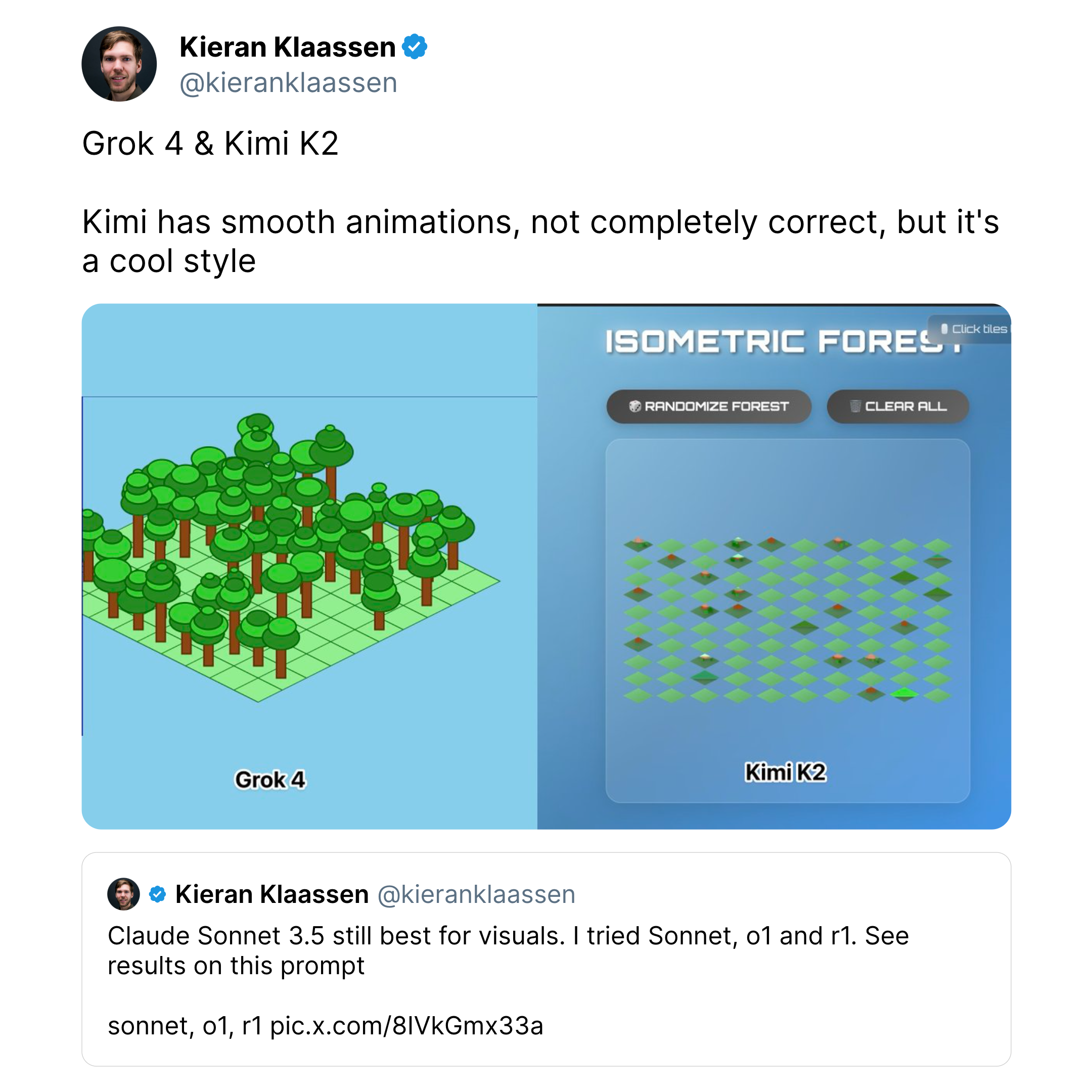

Visual generation prompt: Grok edges out new open-source model Kimi

“Grok generated something good looking. [New open-source model] Kimi [did] too, [but] less correct than Grok.”—Cora general manager Kieran Klaassen, comparing Grok and Kimi K2’s performance on his trademark test of prompting new models to generate an image of a specific kind of forest

“Grok 4 is kind of slow.”—Kieran, a few minutes after tweeting about the image Grok created

And a few more thoughts from Every about Grok 4:
