As a reminder, I’m Eugene Wei, a former product guy at companies like Amazon, Hulu, Flipboard, and Oculus, and I’ve kept a personal blog Remains of the Day since 2001, offering what I describe as “Bespoke observations, 80% fat-free”, though the perceived fat ratio will vary depending on your tastes in technology, media, and all the other random topics I cover.

I was flipping through photos from the five weeks I spent in Italy in 2015. It feels like gazing through a tunnel in time, to another age, when I was living through what felt like peak travel and didn’t even know it.

One night I walked into a town square on the Amalfi Coast. People were gathered everywhere, with only an occasional pint-sized Italian car or scooter parting the groups wandering and loitering. It felt like wandering into a Fellini film.

I’ve flown out of the country every year this decade. I’d guess my odds of flying overseas at any point this year to be < 5%.

High-Intensity Parenting

Since my last issue, weeks ago now, I was summoned into emergency childcare duty by my sister in Oakland. She has three kids, aged 6, 4, and 1, and she’s, for now, a full-time work-from-home mom. Her husband is an ER doc and often works night shifts.

Next to them, I have nothing to complain about from having to shelter in place, something I got an up-close-and-personal taste of while trying to entertain, feed, and homeschool my nieces while preventing my toddler of a nephew from eating something toxic or tumbling down a stairwell anytime he waddled out of my view.

What is that saying about no deficit hawks in a depression? Let’s just say I’ve discovered that I’m from the MMT school of screen time. Print more screen time! These limits are all arbitrary! [The only drawback to my policy is weaning the little ones off their iPad when it’s time to eat or sleep. It’s like trying to floss a great white shark. I guess this is the equivalent of the fears about policies like UBI, though the screen addiction fear is empirically undeniable.]

This meme going around about the difference in the pandemic experience of single people and parents is so real to me right now. Just know that if you’re a single person complaining about boredom or horniness or procuring flour, or if you’re a single person hailing the productivity boost of working from home full-time, somewhere a parent stuck at home is flipping you off (with a child in the other arm).

[Single person (left) complaining to a parent (right) about how they’re getting bored of attending Zoom parties during shelter-in-place]

Raising children is hard enough, but in the U.S. we play this game on the highest difficulty level, the “atomic family structure” setting. The wealthy pay for childcare (the services architecture model of child-rearing), but a pandemic is forcing some of them to try the game at expert difficulty level also.

My sister, her husband, and I resort to a sort of tag-team model, where each of us takes the kids for a few hours at a time until we can take no more, and then we tap one of the other adults in. It’s like parenting high-intensity interval training.

Over the past few years, I’ve become more bearish about any easy pro-natalist prescriptions. By all accounts, parenting is costlier now than at any other point in history while the benefits have remained largely the same. This is not in any way to question the joy that children bring, just a skepticism that children today are more of a treasure than in times past, especially in a former era when they were your farmhands.

Higher education as a financially lucrative status-signaling mechanism increased the hours parents spent shuttling children around to a variety of activities; the investment of parental time per child has increased in this age even as more parents have taken on full-time jobs. While that trend could be reversed in any number of ways, including a shift in norms, many of the other underlying factors behind the decline in birth rate seem irreversible.

We are unlikely to see a reversal in the decline in child mortality, which led women in the past to have more children. We are unlikely to return to an agrarian economy, where children were the first line of labor to help you operate your farm. We are unlikely to reverse women’s increased participation in the workforce, which pushed children off into the future, or permanently, for some. The opportunity cost for women of having children has risen over the years, and that’s a good thing. We’re unlikely to reduce the use of birth control, at least at a global level.

Three factors that could be reversed are the rising costs of healthcare, education, and housing, though such developments seem more likely to arise from some crisis than through any direct intervention. And, through advances in medicine, we might someday lower the childbearing cost on women (increased surrogacy or the continued advances in artificial wombs, to take two examples).

Some return to a more communal model of raising children seems the most feasible medium-term solution. Think of it as the zone defense style of parenting. Look, it’s hard to watch The Last Dance without realizing that the modern NBA game, where zone defense is legal, is more watchable than the basketball of bygone days. In the same way, communal childcare is likely a net gain for everyone over the isolation game style. Even pre-pandemic, I was hearing of more and more distributed daycare models. The same may come for homeschooling if a market develops to price out the latent demand.

Anyhow, shout out to all the parents out there sheltered in place around the clock, especially the mothers on this day, and especially those with children at that age where deep willfulness and an underdeveloped sense of other people’s state of mind intersect in a Molotov cocktail of despotism and anarchy. Being around my nieces at their young age is a deep reminder that much of maturing into an adult is developing emotional shock absorbers to dampen the inevitable swings in the course of an average day. It’s no fault of them or any of the children in the world that they’re having to live through a pandemic, but that makes it no less of a Sisyphean ordeal for their parents.

This issue is a series of odds and ends, as always, though, given current circumstances, many relate to the invisible elephant in the room, SARS-CoV-2. Turns out I exceeded the max length for a newsletter so I will put the rest in an issue for tomorrow or the day after.

Herd Periodicity

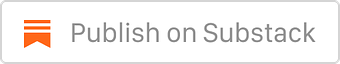

In my last issue, I asked people if they could guess what Google Trend term this graph depicted.

Two people emailed me the correct answer: “porn.”

I bring this up because it’s amusing in a Beavis and Butt-Head way, yes, but more as an example of emergent periodicity. Especially if you’re a consumer product manager or investor, every Google Trend graph that displays periodicity feels like a map to the most deep-rooted architectural patterns in our psyches, like that maze image in Westworld.

In some ways, the most attractive bets for an investor are those companies attempting to vary a business model while holding human behavior stable. Sometimes both a new way of doing something and a new human desire converge (typically some form of rising consumer expectation as societies and economies scale up Maslow’s hierarchy), but in general people are remarkably consistent in nature. Our biological and social operating systems evolve on more geologic timescales. For example, people sometimes ask me if we will ever stop being status-seeking monkeys, and I always answer that it’s possible, but we’ll all be long dead before that happens.

The TV shows Devs and Westworld both ponder the possibility of a computer that can, with amazing accuracy, model and thus predict human behavior. The premise is implausible even as it makes for a great high concept sci-fi pitch. However, at the herd level, some forms of human behavior are indeed as predictable as clockwork. Examples of such emergent periodicity in human behavior are everywhere if you know where to look. Google Trends is a handy source for the masses, but if you work at a company with a lot of customers, your dashboards are likely full of them.

Any individual might be unique for any number of reasons, but as a group, humans distribute normally time and again.

Look at a search for any diet and you’ll see this seasonal shape, a map of the tides of human willpower, waxing and waning, with a spike of guilt at the start of the new year after a holiday season of gluttony.

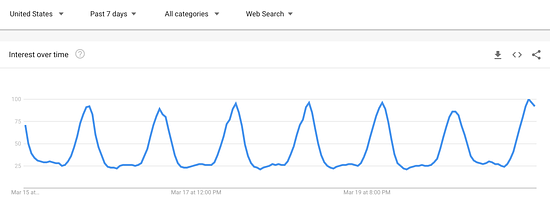

A graph of searches for any education-related platform or tool will track the school calendar.

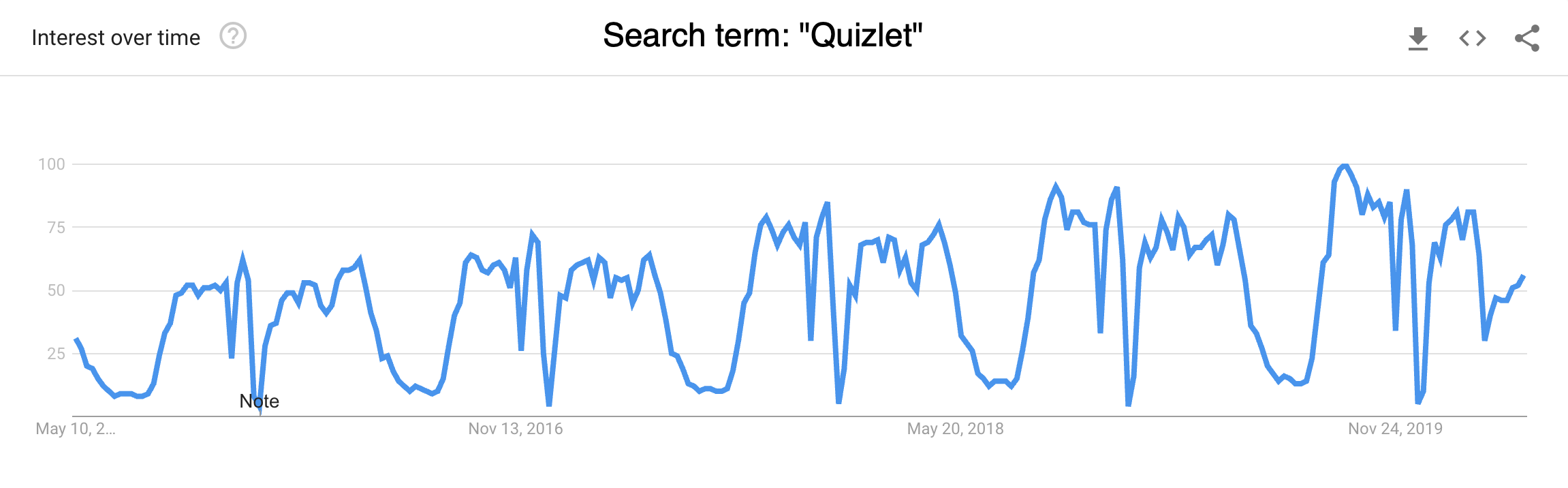

Search volume for “wedding venues” also has a distinctive annual seasonality. At the start of a new year, at the same time as people are searching for diet-related terms, many (newly?) engaged couples begin to hunt for a place to host their nuptials. July sees a mid-season spike in searches as well, and early to mid-December is the trough.

The daily periodicity of searches for “porn” is somewhat curious. You’d expect that searches wouldn’t peak during work hours, but 1am PT and 4am ET in the US is so consistently the peak that I wonder if there’s some hormonal or sociological correlation. The cure for insomnia?

The other reason I bring up this graph, though, is pandemic-related. It has to do with the nature of prediction, and how we plan for probabilistic outcomes.

Predictable surprise

“I am shocked—shocked—to find that gambling is going on in here!” Captain Louis Renault, Casablanca

Greek myths are full of oracles whose divinations fall on deaf ears, so much so that the term Cassandra has become the canonical term for just such a prophet. She was the daughter of Priam, King of Troy. The god Apollo had the hots for her and wooed her with the gift of prophecy, but when she turned him down, he laid a curse on her so that no one would ever believe her. Cassandra, as the woman who’s always right but no one listens to, should be the patron saint of #believewomen.

Here’s an example of another ignored prophet, from Emily Wilson’s marvelous translation of The Odyssey:

Then Zeus, whose voice resounds around the world,

sent down two eagles from the mountain peak.

At first they hovered on the breath of wind,

close by each other, balanced on their wings.

Reaching the noisy middle of the crowd,

they wheeled and whirred and flapped their mighty wings,

swooping at each man’s head with eyes like death,

and with their talons ripped each face and neck.

Then to the right they flew, across the town.

Everyone was astonished at the sight;

they wondered in their hearts what this could mean.

Old Halitherses, son of Mastor, spoke.

More than the other elders, this old leader

excelled at prophecy and knew the birds.

He gave them good advice. “Now Ithacans,

listen! I speak especially for the suitors.

Disaster rolls their way! Odysseus

will not be absent from his friends for long;

already he is near and sows the seeds

of death for all of them, and more disaster

for many others in bright Ithaca.

We have to form a plan to make them stop.

That would be best for them as well by far.

I am experienced at prophecy;

my words came true for him, that mastermind,

Odysseus. I told him when he left

for Troy with all the Argives, he would suffer

most terribly, and all his men would die,

but in the twentieth year he would come home,

unrecognized. Now it is coming true.”

Eurymachus, the son of Polybus,

replied, “Old man, be off! Go home and spout

your portents to your children, or it will

be worse for them. But I can read these omens

better than you can. Many birds go flying

in sunlight, and not all are meaningful.”

I’ve always found the recurrence of the Cassandra trope in Greek mythology dubious, but this pandemic has me reconsidering its credibility. All throughout history, humans have ignored credible warnings from domain experts.

In 2018, a team at Johns Hopkins wrote a report titled The Characteristics of Pandemic Pathogens (PDF). They reviewed the literature and interviewed experts in the field to try to determine what was most likely to cause the next global catastrophic biological risk.

It feels like an admonition, a slap in the face, how accurately it describes what we’re going through right now. The conclusion of the report was that the most likely source of the next pandemic would be a virus rather than a bacterium, prion, or protozoan. Most likely an RNA virus, one that would spread asymptomatically from human to human, via respiration, and in the worst case, one that could move between animals and humans. The two classes of virus that were obvious suspects given that checklist were influenzas and coronaviruses.

Furthermore, the report predicted that human errors, such as judgments made for political reasons, could exacerbate the impact of such a crisis, exposing inadequate supplies of ventilators and ICUs in hospitals. Supply chains would break under the surge of cases.

That’s just one of many reports which foresaw the current pandemic. But, even given that, one could argue that many predictions never come to pass. How should we judge leaders who are warned all the time of all sorts of possible calamities?

As a general principle, I agree. To judge our leaders with the smug certainty of hindsight while ignoring the informational fog in which they make their decisions is some form of fundamental attribution error. Informational noise is real.

However, in this case, I’m less sympathetic. For one thing, it wasn’t just that so many reports predicted the next pandemic but that so many agreed on exactly the type of outbreak that would be most challenging and exactly which institutions and infrastructure would break.

Furthermore, the responsibility of leaders is not to just prioritize what is urgent or salient but to help the team focus on what is important. Long-term decision-making is exactly the type of thing a leader, with fewer day-to-day responsibilities and a broader set of inputs, should spend some of their bandwidth on.

Scott Alexander notes in “A Failure, but not of Prediction” that humans tend to be more Goofus than Gallant.

We do, as a species, seem to prefer deterministic rather than probabilistic thinking. If the weatherman says there’s a 10% chance of rain and we are caught in the rain without an umbrella the next day, we curse out the weatherman.

Even for those who don’t like to gamble, I recommend playing some Blackjack at a casino, just to test one’s ability to think clearly through emotional highs and lows when you have skin in the game. If you play perfect strategy, and even if you count cards, you still may end up down. Do people take bad beats in stride? Can they separate process from outcome?

In The Andromeda Strain (a recent pandemic re-read), Michael Crichton cites Montaigne as saying, “Men under stress are fools, and fool themselves.” I couldn’t find any direct attribution of Montaigne saying such a thing but it’s wise whatever the provenance.

I like the term Joshua Gans assigns this pandemic: predictable surprise.

Humans are also terrible at anticipating events that happen, well, once in an average lifetime. To some extent, that makes sense. Evolving a cognitive defense against a threat that may occur once in your life would be a costly allocation of resources when we were encountering more imminent mortal threats every day.

Maurice Hilleman, the relatively unknown scientist “who saved more lives than all other scientists combined” (source: Vaccinated) through the nine vaccines he developed, observed two interesting patterns in studying the history of influenza pandemics.

H2 virus caused the pandemic of 1889.

H3 virus caused the pandemic of 1900.

H1 virus caused the pandemic of 1918.

H2 virus caused the pandemic of 1957.

H3 virus caused the pandemic of 1968.

H1 virus caused the pandemic of 1986.

First, the types of hemagglutinins occurred in order: H2, H3, H1, H2, H3, H1. Second, the intervals between pandemics of the same type were always sixty-eight years—not approximately sixty-eight years, but exactly sixty-eight years.

He had a theory to explain the 68-year periodicity and a prediction.

Sixty-eight years was just enough time for an entire generation of people to be born, grow up, and die. “This is the length of the contemporary human life span,” said Hilleman. “A sixty-eight-year recurrence restriction, if real, would suggest that there may need to be a sufficient subsidence of host immunity before a past virus can regain access and become established as a new human influenza virus in the population.” Using this logic, Hilleman predicted that an H2 virus, similar to the ones that had caused disease in 1889 and 1957, would cause the next pandemic—a pandemic that would begin in 2025.
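The arithmetic behind Hilleman’s pattern is easy to check for yourself. Here is a minimal Python sketch that uses only the years quoted above; the function names are my own, and projecting the 68-year period forward is his hypothesis, not an established rule.

```python
# Pandemic years by hemagglutinin type, as listed in the quoted passage.
pandemics = {
    "H2": [1889, 1957],
    "H3": [1900, 1968],
    "H1": [1918, 1986],
}

def intervals(years):
    """Gaps, in years, between consecutive pandemics of the same type."""
    return [later - earlier for earlier, later in zip(years, years[1:])]

def next_expected(years, period=68):
    """Naive projection: last recorded pandemic year plus the assumed period."""
    return years[-1] + period

gaps = {h_type: intervals(years) for h_type, years in pandemics.items()}
projections = {h_type: next_expected(years) for h_type, years in pandemics.items()}

for h_type in pandemics:
    print(h_type, gaps[h_type], projections[h_type])
```

Every gap comes out to 68, and the projection for H2 lands on 2025, which is where Hilleman’s prediction comes from.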

In fact, H1 influenza jumped the queue with the H1N1 pandemic of 2009. Increased urbanization and the encroachment of man into animal habitats may accelerate the cycle. At any rate, if we make it through the current pandemic, watch out for H2 to make a comeback in 2025.

For much of human history, the average person didn’t even live 68 years. I’ve never lived through a pandemic that actually affected my day-to-day life; I remember SARS and H1N1, but they didn’t rearrange my life like SARS-CoV-2. So, for me, this literally is a once-in-my-lifetime (thus far) event.

Our lizard brains have elaborate fight-or-flight responses to immediate threats, and nearly no strong reaction to once-in-a-lifetime events that are so low probability that they might not even occur in our life (the exception is death, the once-in-a-lifetime event that we’ll all experience).

Still, low probability events do occur with high probability, and with enough time and enough trials, they’re effectively inevitable. A single accurate prediction may be impossible even in a deterministic setting (sorry Westworld and Devs, I still find that idea absurd), but many outcomes are predictable even in a probabilistic context. That this pandemic would be SARS-CoV-2 specifically was not predictable, but that a pandemic of this type would occur at some point was. SARS-CoV-2’s severity of impact on the global economy may be black swan in nature, but the arrival of yet another pandemic is not. In fact, it’s inevitable that more pandemics will occur in our future. Fool me once, shame on me, fool me every 68 years or so, shame on me, if I’m still alive.
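To put a rough number on “effectively inevitable,” here is a small Python sketch. The 1% annual probability is purely an illustrative assumption of mine, not an estimate of actual pandemic risk, and it treats each year as an independent trial, which real outbreaks are not.

```python
def prob_at_least_once(p_per_year, years):
    """Chance that an event with independent annual probability p_per_year
    happens at least once over the given number of years."""
    return 1 - (1 - p_per_year) ** years

# Hypothetical 1%-per-year event, viewed over a decade, a 68-year span, and two centuries.
for years in (10, 68, 200):
    print(years, round(prob_at_least_once(0.01, years), 3))
```

Under those assumptions, an event you would shrug off in any given year is roughly a coin flip over a 68-year span and a strong favorite over two centuries.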

That old saying about the frog boiling to death from water heated slowly? It impugns frogs, and not just because it’s false. But humans, with brains over 10,000X the weight of a frog, will let themselves be boiled to death over a long enough period of time; that’s almost literally just the definition of global warming. But if you don’t believe in that, at least we now have proof that some portion of us will bathe in a viral soup, screaming the whole time that it’s just a flu bath.

In the end, the Cassandra trope is almost self-referential. A circular loop of oracular tragedy, prophesying its own recurrence. That is, the only thing that we should believe is that we’ll continue to not believe.

As Hegel said, “We learn from history that we do not learn from history.”

Complex systems run in degraded mode

One of my favorite points from How Complex Systems Fail (PDF) is the fifth one.

Complex systems run in degraded mode.

A corollary to the preceding point is that complex systems run as broken systems. The system continues to function because it contains so many redundancies and because people can make it function, despite the presence of many flaws. After accident reviews nearly always note that the system has a history of prior ‘proto-accidents’ that nearly generated catastrophe. Arguments that these degraded conditions should have been recognized before the overt accident are usually predicated on naïve notions of system performance. System operations are dynamic, with components (organizational, human, technical) failing and being replaced continuously.

Cousin concepts I return to again and again are Buffett’s idea that “when a management with a reputation for brilliance gets hooked up with a business with a reputation for bad economics, it’s the reputation of the business that remains intact” and Marc Andreessen’s “The only thing that matters is product-market fit.”

An under-examined implication of these sayings is that a poorly run business with a great business model can flourish for long stretches in a degraded state. Or, a business might be terribly run but financially great. These are the types of idiot-proof franchises Buffett loves.

In fact, we should apply the greatest scrutiny, especially in tech, to network effects businesses with high software margins. Running such businesses comes with a wide margin for error given how much cash they generate. An annuity-like profit stream papers over all sorts of operational inefficiency.

If you’re searching for the most well-run company in the world, it’s far more likely to be one with very little competitive advantage, low margins, and fierce competition. Such a company may make for a terrible business, but the fact that it can even exist and survive depends on absolute operational excellence.

One such brutal sector is the airline industry (even Warren Buffett couldn’t take it anymore and dumped his airline holdings in the pandemic). Yet, if any airline comes out of this pandemic with solid margins and increased market share, you might want to take a look at what they’re doing right. Given the unionized labor and commodity-like offering of your typical airline, it’s a miracle any of them eke out any profit. Most do not.

In the tech world, turning a low margin retail business like Amazon.com into an enduring giant is playing the entrepreneurial game on a higher difficulty setting than many of the high margin network effects businesses that are effective monopolies in winner-take-all categories. I’m not saying those network-effects monopolies (you can guess which ones fall into that category) are necessarily poorly run, but if they were, could you even tell?

[BTW, if you want to measure the margins of a private business, one clue is to look at whether they have a snack room for employees, and if they do, how expensive the offerings are. Over a long enough period, the quality of employee perks and the size of a company’s margins tend to converge.

My corollary to this is that you can tell how much a services firm is overcharging you by the cost of the thank-you gift they send you at the conclusion of the engagement.]

It’s clear now that there are multiple levels of institutional failure and breakdown in the United States. At some point, as the pandemic spread across the country, it was impossible not to look on with utter horror at how utterly impotent our national response was and still is. As Marc Andreessen wrote, “As I write this, New York City has put out a desperate call for rain ponchos to be used as medical gowns. Rain ponchos! In 2020! In America!” It was the pandemic equivalent of Jim Mora’s “Playoffs?!” rant, just with ponchos.

The thing is, the United States has been running for a long time in a degraded state. A friend of mine loves to lament that he felt like he moved to America just as it entered the stage of the Roman Empire in decline.

Systems sustain themselves in a degraded state until a sufficiently strong stressor breaks them, overwhelming all those duct-taped solutions and jerry-rigged ramparts. For companies, often that stressor is an aggressive, superior competitor. For American institutional competence and industrial capacity, that stressor was SARS-CoV-2.

It’s now May and we are still short PPE for many of our essential frontline workers, and Covid-19 tests are still being rationed. Companies and individuals are fighting through overloaded systems to try to redeem government aid. We have no national method to even wire money to those who qualify.

America has thrown an area rug over a lot of the cracks in its foundation for a long time. But patches in a complex system are meant to handle normal levels of turbulence. This pandemic is not that. We can grumble about tying healthcare to employment but do nothing about it when unemployment is low, but when a pandemic puts tens of millions of people out of work in a month, we are left naked with our failure.

False Floors

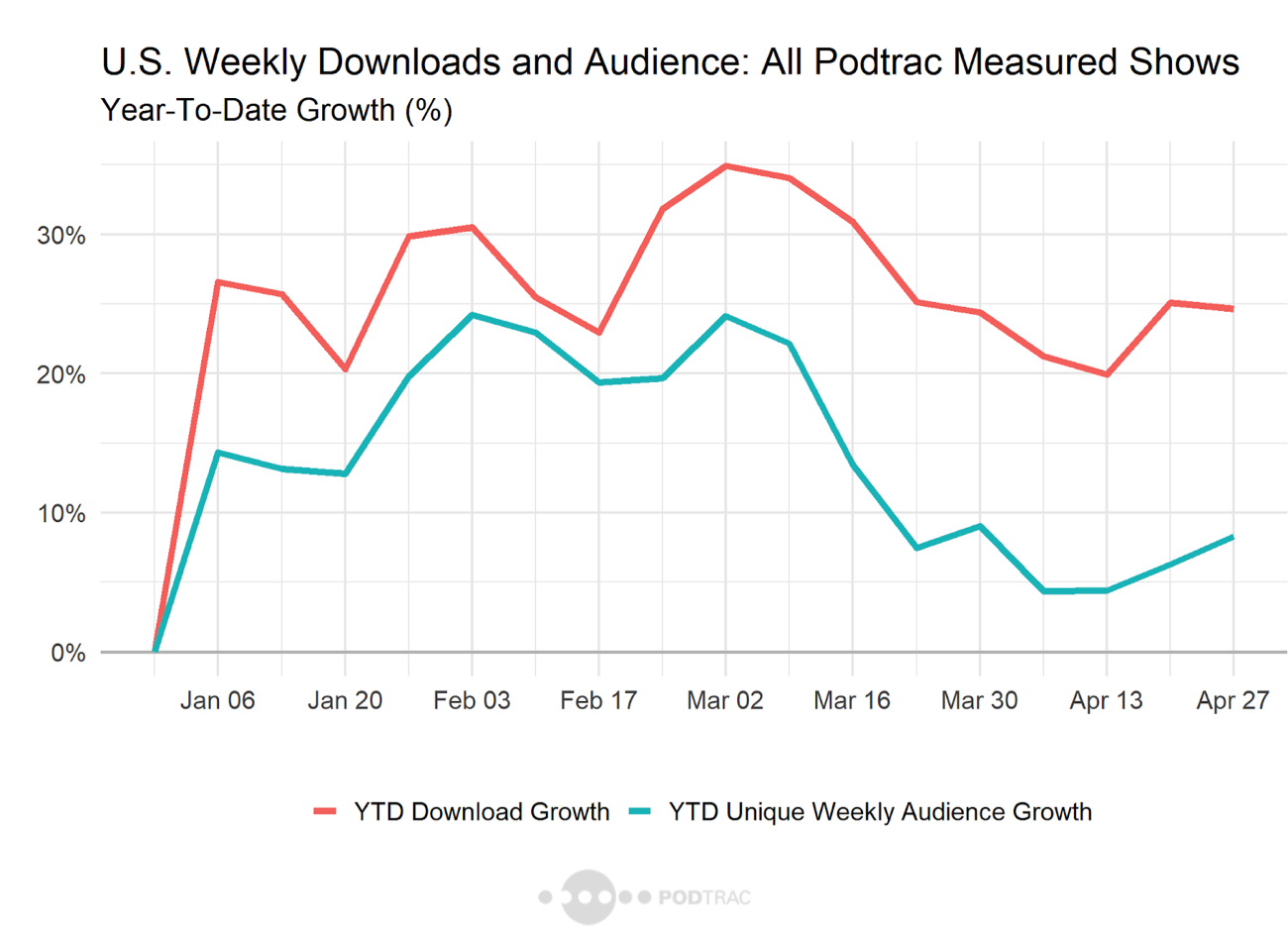

Last issue I wrote about some reasons why music streaming might be down during the lockdown, and since then some stats have come out showing that podcast listening slowed.

Some highlights from Podtrac for April 2020:

All top 20 publishers saw a decrease in US monthly Audience in April over March.

The average Unique Monthly Audience for the top 10 publishers decreased by 15% from March while it increased by 11% from April of 2019.

Global Unique Streams & Downloads decreased for the top 10 publishers by 6% over March and increased by 20% over April 2019.

As I noted in the last issue, the addressable market is rarely defined by any single metric. Just because people have more minutes at home now doesn’t mean all those minutes are created equal. For many of those people, time at home includes many distractions and obligations, like kids. The recreational options also proliferate at home; you can watch TV, play on a video game console, read books and magazines, fiddle around on your phone or tablet, and so on.

If I’m sitting in traffic during my work commute and have to focus on the road, my alternatives are limited. Streaming music or listening to a podcast sounds much better than just staring at the bumper of the car in front of me in silence while driving. They’re perfect for consuming while doing household chores or working out.

Your psychological cues and habits also vary in each of those contexts, but ultimately, podcasts are an ideal form of entertainment in any environment like driving when your body and eyes are fully occupied.

This really matters if you’re launching some new product or service and attempting to define the market opportunity. Take, for example, the streaming service Quibi. The name derives from “quick bites,” and they’ve consistently positioned themselves as streaming videos that can be consumed in short chunks, with chapters running from 5 to 10 minutes, for those moments when you have a small attentional crevice in your schedule.

I have many friends who work there, and I wish them all the tailwinds (though not a pandemic). Launching a new streaming service in this competitive entertainment market takes real bravado.

However, one of the reasons I’m bearish is that I don’t think people consume episodic narrative video content in scattered 5 to 10 minute windows throughout the day. They may watch an episode of The Office over lunch, and they may watch a short standalone YouTube video that a friend texts to them between chores at home, or scroll through a series of short videos on TikTok or Instagram, but a 10-minute episode of a longer narrative? That’s, to me, a rare and non-standard behavior.

To clear your mental cache, then spin up enough mental energy to reload the previous chapters into that cache and then follow along with the storyline during a 10-minute break in your day, is not an insignificant request even if the time commitment is low in absolute terms. When I’m in line at the grocery, waiting for the bus, or even just sitting down for a minute or two between chasing my nieces around, the types of content I prefer are bite-sized, but they are also low on narrative payload.

Social media is optimized for such gaps in time. You can consume many units of content from Instagram, Facebook, Twitter, TikTok, in the span of a few minutes. Doing so provides almost an illusion of productivity, even if it’s the intellectual equivalent of being pelted in the face by a series of packing peanuts.

When I watch video of sufficient narrative complexity (I’m not even referring to something as convoluted as Westworld), I usually set aside time for it, enough to digest at least one episode. Today’s serial television series are an order of magnitude more complex than in the pre-internet, pre-on-demand era.

To be fair, not all Quibi content is of linear narrative form. Some are meant to be consumed in stand-alone episodes. Still, Quibi is squeezed.

If it steers towards the lower end of the narrative complexity space, it puts them in competition with the near-infinite supply of similar content from social networks, minus the addictive status incentives and social gawking those offer, not to mention the infinite supply of time-killing content on YouTube. If it steers towards higher narrative complexity serials, it faces off against much better capitalized giants with substantially larger catalogs of stories with already onboarded fanbases, like Netflix, HBO, Disney Plus, Amazon Prime Video, and so forth.

In a post-launch interview, CEO Meg Whitman noted they are prioritizing a TV-streaming version of their app in the wake of their launch. That strikes me as odd for a few reasons. One is that you don’t usually turn on your television to watch a short video if you have a small gap of time in your day, not when your phone or tablet is available. After all, watching shorter form video was the entire basis of their thesis.

Television viewing audiences also skew older than phone video viewers. Are older audiences going to choose Quibi over more familiar formats they’ve been watching their entire life like hour-long dramas, half-hour sitcoms, and full-length films, of which Netflix, HBO, Amazon Prime Video, and Disney Plus have massive catalogs?

Nothing is set in stone, of course. It’s only been about a month since launch, and they can adjust their programming based on market feedback. However, the types of content they are making aren’t the types of things that can be turned around in a day or a week, especially not the scripted narrative content. They are targeting premium short-form video, and that, unlike UGC, takes time to produce and edit. So their reaction time is inherently slower.

Premium content also costs more per minute, reducing their total shots on goal. Even if you assume that all $1.75B of the money they raised goes towards content production, at estimated costs of $100,000/minute for their productions (lower than a Hollywood blockbuster though obviously much higher than for largely UGC-driven video networks like YouTube or TikTok), that only buys them 292 hours of content.
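For anyone who wants to check my back-of-the-envelope math, here is a quick Python sketch. The two inputs are the figures from the paragraph above; the function name is just mine, and the assumption that every dollar goes to production is, as noted, generous.

```python
def content_hours(budget_usd, cost_per_minute_usd):
    """Hours of content a budget buys at a flat per-minute production cost."""
    minutes = budget_usd / cost_per_minute_usd
    return minutes / 60

# $1.75B raised, $100,000 per finished minute (both taken from the text above).
hours = content_hours(1_750_000_000, 100_000)
print(round(hours))
```

That is 17,500 minutes in total, which rounds to the 292 hours cited above.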

I’m over-simplifying the problem; succeeding as a streaming service is much more of a multivariate problem than this implies. Still, the broader point holds: carving out market share of the human mind requires understanding how it allocates its energy, and that isn’t as simple a model as many pitch decks would have you believe.

There are different types of entrepreneurial risk in Silicon Valley. One is timing risk. I’m sympathetic to that; most good ideas in tech have been tried many times before until finally one day a particular set of changes in the environment makes it the right time for that particular idea to get traction.

A wholly different class of risk is forced behavioral change. It can happen, but the assumptions are more fraught. Behavioral rigidity in humans is a very real phenomenon; human nature evolves at glacial speeds. The risk with Quibi is that they aren’t the first mover in a new category (premium short-form video) but a late and much less well-capitalized mover in an old, highly competitive market.

Gift 3-month subscription to The Browser

I have about 50 gift 3-month subscriptions to The Browser to give away. If you’d like one, email me your name and email address (or leave it in a comment here, I’ll leave it unpublished so no one else sees it).